[Updates to the Article and Codebase / Code Snippets ~ 17/Feb/2021]

- Fixed Possible Connection Leaks in Network Code

- Fixed Poor Code and Bad Programming Practices

- Improved Code Formatting, Practiced Clean Code

- Mowglee v0.02a is Released (Previously, v0.01a)

To do this, you should have intermediate to expert level core Java skills, an understanding of the intricacies of multi-threading in Java, and an understanding of the real-world usage of and need for web crawlers.

What You Will Learn

- How to write simple and distributed node-based web crawlers in core Java.

- How to design a web crawler for geographic affinity.

- How to write multi-threaded or asynchronous task executor-based crawlers.

- How to write web crawlers that have modular or pluggable architecture.

- How to analyze multiple types of structured or unstructured data. (Covered Minimally)

Geo Crawling

Some classes have a main() method, but they were written only for unit testing. The class to run is in.co.mowglee.crawl.core.Mowglee. You can also run the bundled JAR file under dist using java -jar mowglee.jar. If you are using JDK 6 for execution (recommended), then you can use jvisualvm for profiling.

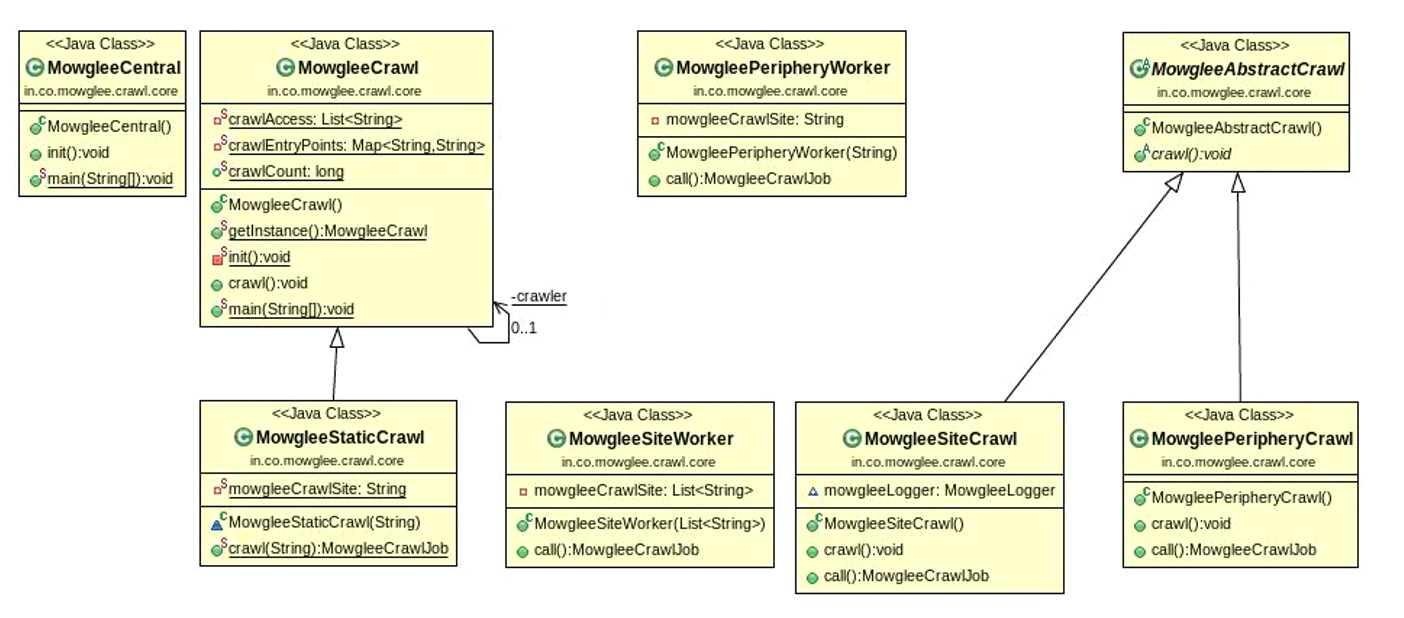

MowgleeCrawl is a class that sequentially invokes all crawl types in Mowglee: static crawling, periphery crawling, and site crawling.

MowgleeStaticCrawl is the starting class for the crawling process. It reads the static geographical home page, or crawl home. You may configure multiple crawl homes for each geography and start a MowgleeStaticCrawl process for each of them. You can visualize this as a very loose representation of a multi-agent system. There is a default safe waiting period of ten seconds that can be configured as per your needs. This makes sure that all data is available from other running processes or threads before the main crawl begins.

MowgleePeripheryCrawl is the Pass 1 crawler that deduces the top-level domains from a given page or hyperlink. It is built with the sole purpose of making MowgleeSiteCrawl (Pass 2) easier and more measurable. The periphery crawl process can also be used to remove duplicate top-level domains across crawls, so that the next pass is more concentrated. In Pass 1, we concentrate only on the links and not on the data.

MowgleeSiteCrawl is the Pass 2 crawler. It instantiates an individual thread pool for each MowgleeSite using the JDK 6 executor service. The crawl process at this stage is very extensive and very intrusive in terms of the type of data it detects. In Pass 2, we classify link types as protocol, images, video, or audio, and we also try to gain information on the metadata of the page. The most important aspect of this phase is that the analysis is dynamic yet controlled: we try to increase the thread pool size as per the number of pages on the site.

For Pass 1, the work is done by MowgleePeripheryWorker. For Pass 2, the work of reading and analyzing links is done by MowgleeSiteWorker. The helper classes it uses include data analyzers, as explained later. MowgleePeripheryWorker uses MowgleeDomainMap for storing the top-level domains.
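
The idea of sizing the thread pool by the number of pages on a site can be sketched as follows. This is only an illustration, assuming Java 8 or later: the class name, the method names, and the two tuning constants are hypothetical and are not taken from the Mowglee codebase, and the per-page task is a placeholder.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch; none of these names come from Mowglee.
class SitePoolSketch {

    static final int PAGES_PER_THREAD = 25; // assumed tuning value
    static final int MAX_THREADS = 16;      // assumed upper bound

    // Grow the pool with the page count, but keep it within [1, MAX_THREADS].
    static int poolSizeFor(int pageCount) {
        int wanted = (pageCount + PAGES_PER_THREAD - 1) / PAGES_PER_THREAD; // ceiling division
        return Math.max(1, Math.min(MAX_THREADS, wanted));
    }

    // Runs one task per page on a pool sized for the site and
    // returns the number of pages that were processed.
    static int crawlSite(List<String> pageUrls) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSizeFor(pageUrls.size()));
        try {
            List<Callable<String>> tasks = new ArrayList<>();
            for (String url : pageUrls) {
                tasks.add(() -> url); // placeholder for reading and analyzing the page
            }
            int done = 0;
            for (Future<String> result : pool.invokeAll(tasks)) {
                result.get(); // wait for the task and surface any failure
                done++;
            }
            return done;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown(); // always release the worker threads
        }
    }
}
```

Capping the pool keeps a very large site from starving the other sites that are being crawled at the same time; the right cap depends on the machine and is something to measure rather than assume.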
The most important lines of code are in MowgleeUrlStream. They open a socket to any given URL and read its contents, as shown below.

    // Requires java.io (BufferedReader, File, FileInputStream, FileNotFoundException,
    // IOException, InputStream, InputStreamReader) and java.net (MalformedURLException, URL).
    public StringBuffer read(String httpUrl, String crawlMode) {
        StringBuffer httpUrlContents = new StringBuffer();
        InputStream inputStream = null;
        InputStreamReader inputStreamReader = null;
        MowgleeLogger mowgleeLogger = MowgleeLogger.getInstance("FILE");

        try {
            // static crawl reads a local file; every other mode opens a network stream
            if (crawlMode.equals(MowgleeConstants.MODE_STATIC_CRAWL)) {
                mowgleeLogger.log("trying to open the file from " + httpUrl, MowgleeUrlStream.class);
                inputStream = new FileInputStream(new File(httpUrl));
                inputStreamReader = new InputStreamReader(inputStream);
            } else {
                mowgleeLogger.log("trying to open a socket to " + httpUrl, MowgleeUrlStream.class);
                inputStream = new URL(httpUrl).openStream();
                inputStreamReader = new InputStreamReader(inputStream);
            }

            // defensive
            StringBuffer urlContents = new StringBuffer();
            BufferedReader bufferedReader = new BufferedReader(inputStreamReader);
            String currentLine = bufferedReader.readLine();
            while (currentLine != null) {
                urlContents.append(currentLine);
                currentLine = bufferedReader.readLine();
            }

            // use the short-circuit && so that trim() is never called on a null url
            if (httpUrl != null && httpUrl.trim().length() > 0) {
                MowgleePageReader mowgleePageReader = new MowgleePageReader();
                mowgleePageReader.read(httpUrl, urlContents, crawlMode);
                mowgleeLogger.log("the size of read contents is " + new String(urlContents).trim().length(),
                        MowgleeUrlStream.class);
            }

            // severe error fixed - possible memory leak in case of an exception! - [connection leak fixed]
            // inputStream.close();
        } catch (FileNotFoundException e) {
            mowgleeLogger.log("the url was not found on the server due to " + e.getLocalizedMessage(),
                    MowgleeUrlStream.class);
        } catch (MalformedURLException e) {
            mowgleeLogger.log("the url was either malformed or does not exist", MowgleeUrlStream.class);
        } catch (IOException e) {
            mowgleeLogger.log("an error occurred while reading the url due to " + e.getLocalizedMessage(),
                    MowgleeUrlStream.class);
        } finally {
            try {
                // close the connection / file input stream
                if (inputStream != null)
                    inputStream.close();
            } catch (IOException e) {
                mowgleeLogger.log("an error occurred while closing the connection " + e.getLocalizedMessage(),
                        MowgleeUrlStream.class);
            }
        }

        // note: the contents are handed to MowgleePageReader above; this buffer is returned empty
        return httpUrlContents;
    }
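
The listing closes the stream by hand in a finally block. On Java 7 or later, the same guarantee can be expressed with try-with-resources. The sketch below is an assumption-laden illustration rather than Mowglee code: the class and method names are hypothetical, logging is left out, and UTF-8 is assumed as the character set, whereas the original relies on the platform default.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.Reader;
import java.net.URL;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch; not part of the Mowglee codebase.
class UrlReadSketch {

    // Reads the source line by line and closes it afterwards, even if reading fails.
    // Line terminators are dropped, as in the original loop.
    static String readAll(Reader source) throws IOException {
        StringBuilder contents = new StringBuilder();
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                contents.append(line);
            }
        }
        return contents.toString();
    }

    // Opens a stream to the URL; try-with-resources closes it on every exit path.
    static String readUrl(String httpUrl) throws IOException {
        try (InputStream in = new URL(httpUrl).openStream()) {
            return readAll(new InputStreamReader(in, StandardCharsets.UTF_8)); // UTF-8 is an assumption
        }
    }
}
```

Because the resource is declared in the try header, there is no separate null check or nested try needed for the close call, which removes the code path where the leak used to hide.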

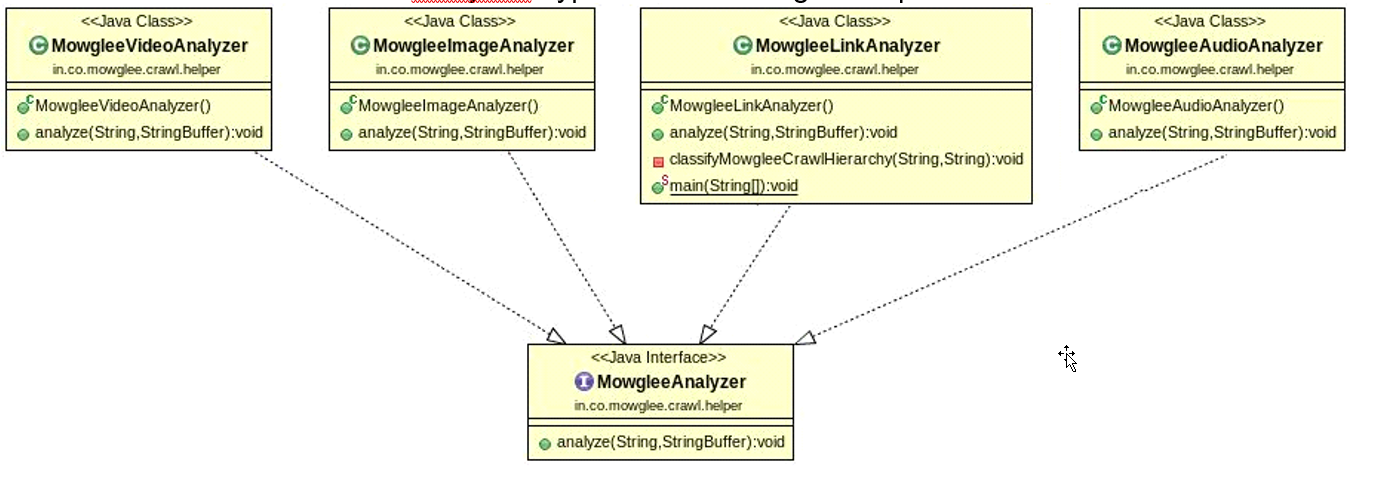

MowgleeLinkAnalyzer uses MowgleeSiteMap as the memory store for all links within a given top-level domain. It also maintains a list of visited and collected URLs from all the crawled and analyzed hyperlinks within a given top-level domain.
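
A per-domain store of collected and visited URLs could look roughly like the sketch below. It is a hypothetical stand-in and not the actual MowgleeSiteMap: the class and method names are invented for illustration, and it assumes that a link should be handed out for crawling at most once.

```java
import java.util.ArrayDeque;
import java.util.HashSet;
import java.util.Queue;
import java.util.Set;

// Hypothetical stand-in for a per-domain link store; not Mowglee's MowgleeSiteMap.
class SiteLinkStoreSketch {
    private final Set<String> known = new HashSet<>();      // every link seen so far
    private final Set<String> visited = new HashSet<>();    // links already handed out
    private final Queue<String> pending = new ArrayDeque<>(); // collected, not yet visited

    // Records a discovered link; returns false if it was already collected or visited.
    synchronized boolean collect(String url) {
        if (!known.add(url)) {
            return false;
        }
        pending.add(url);
        return true;
    }

    // Hands out the next unvisited link and marks it visited, or returns null when none remain.
    synchronized String nextToVisit() {
        String url = pending.poll();
        if (url != null) {
            visited.add(url);
        }
        return url;
    }

    synchronized int visitedCount() {
        return visited.size();
    }

    synchronized int pendingCount() {
        return pending.size();
    }
}
```

The methods are synchronized because several worker threads are expected to share one store per domain; a concurrent collection would be another reasonable choice.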

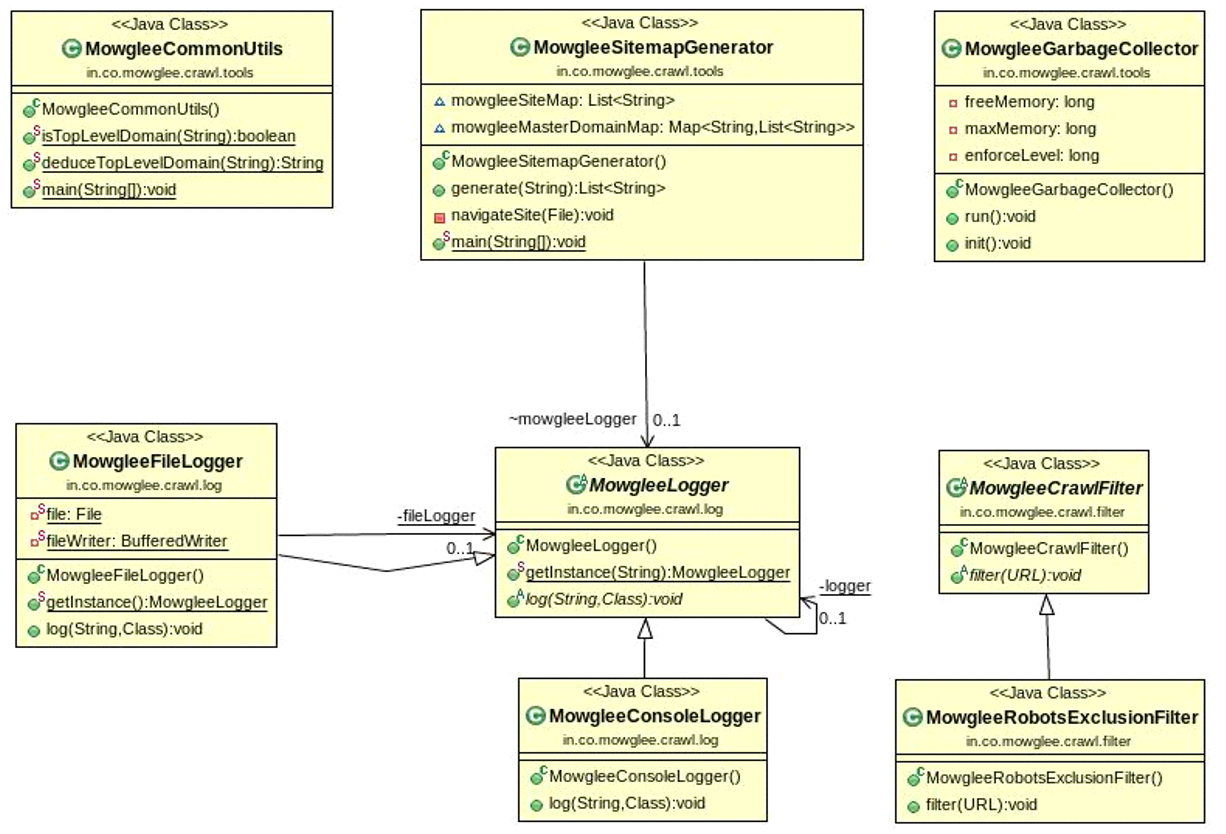

MowgleeGarbageCollector is a daemon thread that is instantiated and started when the main application runs. Because a large number of objects are instantiated per thread, this thread tries to control the internal garbage collection mechanism and keep memory usage within safe limits.

MowgleeLogger provides the abstract class for all types of loggers in Mowglee. There is also an implementation of the Robots Exclusion Protocol in MowgleeRobotsExclusionFilter, which inherits from MowgleeCrawlFilter. All other types of filters that are closer to the functioning of a crawler system may extend this class.

MowgleeCommonUtils provides a number of common helper functions, such as deduceTopLevelDomain(). MowgleeSitemapGenerator is the placeholder class for generating the sitemap as per the sitemaps protocol, and a starting point for a more extensive or custom implementation. Implementations for analyzing images, video, and audio can be added; only placeholders are provided.

Applications

Website Governance and Government Enforcements

Ranking Sites and Links

Analytics and Data Patterns

Advertising on the Basis of Keywords

Enhancements

Store Using Graph Database

MowgleePersistenceCore class.

Varied Analyzers

MowgleeAnalyzer, and you can refer to MowgleeLinkAnalyzer to understand the analyzer's implementation.

Focused Classifiers

You can add a hierarchy of classifiers or plugins to automatically classify the data based on terms, keywords, geographic lingo, or phrases. An example is MowgleeReligiousAbusePlugin.
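
A keyword-based classifier plugin might be sketched as below. Mowglee's plugin contract is not shown in this article, so the Plugin interface, the KeywordPlugin class, and the classify() helper are all hypothetical names, and plain case-insensitive substring matching is an assumed, deliberately simple strategy.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical sketch of a classifier plugin chain; not Mowglee's plugin API.
class ClassifierSketch {

    // Assumed plugin contract: a label plus a yes/no decision for a page.
    interface Plugin {
        String label();
        boolean matches(String pageText);
    }

    // Matches a page when it contains any of the configured keywords.
    static class KeywordPlugin implements Plugin {
        private final String label;
        private final List<String> keywords = new ArrayList<>();

        KeywordPlugin(String label, String... keywords) {
            this.label = label;
            for (String keyword : keywords) {
                this.keywords.add(keyword.toLowerCase(Locale.ROOT));
            }
        }

        public String label() {
            return label;
        }

        public boolean matches(String pageText) {
            String text = pageText.toLowerCase(Locale.ROOT);
            for (String keyword : keywords) {
                if (text.contains(keyword)) {
                    return true;
                }
            }
            return false;
        }
    }

    // Runs every plugin over the page and collects the labels that matched, in plugin order.
    static List<String> classify(String pageText, List<Plugin> plugins) {
        List<String> labels = new ArrayList<>();
        for (Plugin plugin : plugins) {
            if (plugin.matches(pageText)) {
                labels.add(plugin.label());
            }
        }
        return labels;
    }
}
```

A hierarchy can be built on top of this by letting a matching plugin delegate to a list of more specific child plugins.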

Complex Deduction Mechanisms

Sitemap Generation
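
As a possible starting point for filling in the MowgleeSitemapGenerator placeholder, the sketch below writes a minimal document in the sitemaps.org format with one loc entry per URL. The class and method names are hypothetical, and optional elements such as lastmod are left out.

```java
import java.util.List;

// Hypothetical sketch; not the MowgleeSitemapGenerator implementation.
class SitemapSketch {

    // Escapes the five characters that must be entity-encoded in sitemap URLs.
    static String escapeXml(String value) {
        return value.replace("&", "&amp;")
                .replace("<", "&lt;")
                .replace(">", "&gt;")
                .replace("\"", "&quot;")
                .replace("'", "&apos;");
    }

    // Builds a urlset document containing one <url><loc> entry per URL.
    static String toSitemapXml(List<String> urls) {
        StringBuilder xml = new StringBuilder();
        xml.append("<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n");
        xml.append("<urlset xmlns=\"http://www.sitemaps.org/schemas/sitemap/0.9\">\n");
        for (String url : urls) {
            xml.append("  <url><loc>").append(escapeXml(url)).append("</loc></url>\n");
        }
        xml.append("</urlset>\n");
        return xml.toString();
    }
}
```

The ampersand is replaced first so that the entities produced by the later replacements are not escaped a second time.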

Conclusion

async mechanism and makes sure that all links that may not be reachable from within a site itself are also crawled. Mowglee does not currently have a termination mechanism. I have provided a placeholder for you to decide as per your usage. This could be based on the number of top-level domains crawled, data volume, number of pages crawled, geography boundaries, types of sites to crawl, or an arbitrary mechanism.

mowglee.crawl file to read the crawl analysis. There is no other storage mechanism provided. You may keep this file open in an editor like EditPlus to continuously monitor its contents.
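
One way to fill in the termination placeholder is a small limits object that the workers update and check. The sketch below covers three of the criteria mentioned above (top-level domains, pages, and data volume). The class name, method names, and the idea of stopping as soon as any single limit is reached are assumptions made for illustration.

```java
// Hypothetical sketch of a termination policy; Mowglee itself ships only a placeholder.
class CrawlLimitsSketch {
    private final int maxDomains;
    private final int maxPages;
    private final long maxBytes;
    private int domains;
    private int pages;
    private long bytes;

    CrawlLimitsSketch(int maxDomains, int maxPages, long maxBytes) {
        this.maxDomains = maxDomains;
        this.maxPages = maxPages;
        this.maxBytes = maxBytes;
    }

    // Call once for every new top-level domain that is crawled.
    synchronized void recordDomain() {
        domains++;
    }

    // Call once for every page that is read, with the size of its contents.
    synchronized void recordPage(long pageBytes) {
        pages++;
        bytes += pageBytes;
    }

    // True once any one of the configured limits has been reached.
    synchronized boolean shouldStop() {
        return domains >= maxDomains || pages >= maxPages || bytes >= maxBytes;
    }
}
```

Workers would check shouldStop() before picking up the next link, so a crawl winds down gradually instead of being interrupted in the middle of a page.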